Lauren Xie

Developing an Open-Source App Scraper for Non-Technical Researchers

Collecting data is difficult, especially if you're clicking through a massive number of apps on the Google Play Store just to look at metadata. If you can write a program that automatically parses the data on each page, collecting it becomes much easier. This, however, requires some coding knowledge and experience or, failing that, paying for a third-party service.

Our mission for this project was to create an easy-to-use, open-source tool that non-technical researchers can use to collect app data without devoting a copious amount of time or spending large sums of money. By creating this resource, we hope to speed up research and analysis that improves our understanding of how apps impact our daily lives and how they can be improved.

Some Background

What even was this project?

As I mentioned above, the goal of this project was to develop an open-source program that non-technical researchers can easily use to scrape the Google Play Store for app metadata to supplement their research. The project was completed over one academic year (September 2022 to June 2023) and culminated in a 10-page project report and a final presentation, which was judged by a panel of 4 computer engineers.

For our final product, we created a downloadable open-source executable with a graphical user interface in which users can easily scrape and retrieve app metadata from the Google Play Store. We used the open-source facundoolano Google Play Scraper as a base for our product and built upon it for a better user experience.

My roles for this project

Project Lead

I led team meetings and project decisions, and I served as the main contact between external stakeholders and my team, including communicating school deadlines.

Project Manager

I led the division of tasks and created a roadmap for project completion and deadlines. I also managed the budget and led sprints and retrospectives.

UX Researcher and Designer

I created the user interview protocol, conducted the interviews, and designed and wrote the UI for the tool using HTML and CSS.

I held many positions over the course of this project and I take pride in each one! However, I want to specifically introduce you to my tasks as the UX Researcher and Designer. Continue reading to learn more about my UX research or click here to read about the design work I did for this project.

Users, users, and most importantly... users!

UX Researcher

Before diving into development, I needed to research the existing tools and users' reactions to them to understand where we could improve the process of scraping apps and reviewing metadata.

Initial Research

First, I researched the existing tools to grasp the process as a user, focusing on one particular open-source scraper: facundoolano’s Google Play Store app scraper. To do so, I downloaded the program from GitHub, ran each function using "mental health" as the search term, and cross-referenced the data I got with the information I saw on the Google Play Store. This gave me first-hand experience of how a user might feel using the program.

The single line of installation code, which actually doesn't work. I had to use an external YouTube tutorial to learn how to install the program.

The JavaScript (JS) file the user needs to create to run the search. The user also needs to write JS code for the program to work. While the code is given, any modification or specific request requires a further understanding of JS.

The results are printed to the command line. They are uneditable and difficult to read.

From my research, the facundoolano Google Play Scraper was difficult for non-technical researchers because it relies heavily on the user understanding code, specifically JavaScript and the command line. I found this out by analyzing which steps required coding knowledge from the perspective of someone who knows nothing about coding. I looked for things like technical jargon in the JavaScript or command-line code and any external programs or code the user has to use, and found that every step required some level of coding knowledge.

User Interviews

One critical pain point I identified was that researchers were wasting time copying and pasting clumps of JSON data into a spreadsheet. This finding was echoed in user interviews with researchers who had either scraped app data before or were currently scraping app data. I interviewed 4 researchers over Zoom to understand the pros and cons of each stage of scraping app data. I followed an interview protocol I created so that every interview used the same format and the results could be compared.

I made sure the interview protocol asked unbiased, open-ended questions so that it would not taint user responses and distort our understanding of our users' needs. Before interviewing users, I reviewed the protocol with my team, my advisor, and Kelea Liscio, a Senior UX Researcher at Google, to get feedback and ensure it was unbiased.

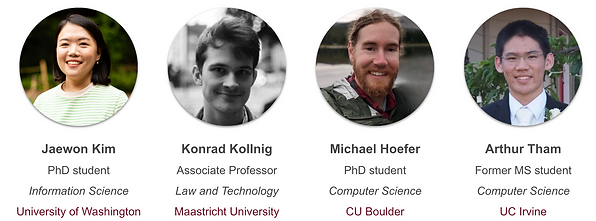

Arthur Tham

Former MS Computer Science UC Irvine

We spent a lot of time learning Node.JS (and JSON) more than we actually looked at the app stuff - like the data we got back.

about his research and paper: A content analysis of popular diet, fitness, and weight self-tracking mobile apps on Google Play

Arthur's experience of losing time to technical knowledge barriers came up again when the researchers discussed how crucial spreadsheets are for modifying data and making notes. Today's tools do not offer an easy way to export data to a spreadsheet, especially for large datasets with thousands of unique records. The researchers described laborious efforts to write their own programs to parse the uneditable JSON objects into versatile spreadsheets. I also found that researchers, regardless of technical knowledge, would prefer an intuitive interface for scraping data over writing code, because a single click of a button saves time compared to writing 20 new lines of code for the same result.
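To make the JSON-to-spreadsheet step concrete, here is a minimal sketch of the kind of conversion the researchers described writing by hand. It is not the code from our tool: `toCsv` and `escapeCsvField` are hypothetical names, the sample records are made up, and it assumes each scraped record is a flat object without nested values.

```javascript
// Hypothetical helper: make one value safe to place in a CSV cell.
function escapeCsvField(value) {
  const text = value === null || value === undefined ? "" : String(value);
  // Quote fields containing commas, quotes, or line breaks; double inner quotes.
  return /[",\r\n]/.test(text) ? '"' + text.replace(/"/g, '""') + '"' : text;
}

// Hypothetical helper: turn an array of flat objects into CSV text.
function toCsv(records) {
  // Use the union of all keys, in first-seen order, as the column headers.
  const columns = [];
  for (const record of records) {
    for (const key of Object.keys(record)) {
      if (!columns.includes(key)) columns.push(key);
    }
  }
  const header = columns.map(escapeCsvField).join(",");
  const rows = records.map((record) =>
    columns.map((key) => escapeCsvField(record[key])).join(",")
  );
  return [header, ...rows].join("\n");
}

// Made-up sample records, for illustration only.
const sampleApps = [
  { title: "Calm Journal", appId: "com.example.calmjournal", score: 4.5 },
  { title: 'Mood "Tracker", Lite', appId: "com.example.mood", score: 4.1 },
];
console.log(toCsv(sampleApps));
```

The resulting text can be saved with a `.csv` extension and opened in a spreadsheet program, which is the editable format the researchers asked for.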

Importance of User Research

These findings told the team exactly which features are most important to our users, and guided our project direction and progress. For our users, the most important aspect of our tool is convenience, because the bulk of our users' time should be spent analyzing, sharing ideas, and reviewing rather than gathering data. From user research, we learned that whatever we make, our product must save researchers time, energy, and money.

Easy and intuitive is the goal

UX Designer

As the UX designer, my main task was to design and create the front end for our project. I first built a prototype in Figma of what the user would see, then created the user interface in HTML and CSS based on that prototype. Click here to see my Figma prototype!

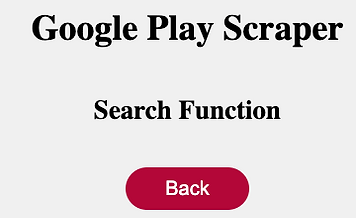

Feature Selection

I decided to focus on a design that is transparent and intuitive, so I initially used a radio selection for the user to choose which feature they want to use. When creating the final version, I decided that the radio selection and the Next button added unnecessary clicks, so I changed the interaction: clicking directly on a feature now takes the user to the respective page. Every page has a back button, so if a user changes their mind about a feature, they can return to the home page at any time.

Prototype of home page

Final version

Colors

Regarding the colors, I used the colors of our school, Santa Clara University (SCU), to show our association with the school even though our product is not a for-profit item.

For links and buttons, I used the official brand red of SCU. Whenever a user hovers over a link or button, its color changes to the official secondary mustard yellow.

Regular button

Hovered button

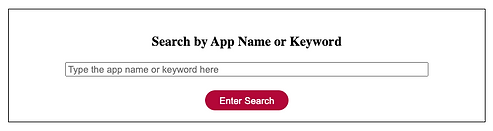

Using a Feature

Diving further into the hierarchy of the prototype and the different features, I wanted our product to be simple to use. For functions like searching for apps within a field or topic, users only need to type the search term, like "mental health" or "journal", into the search box.

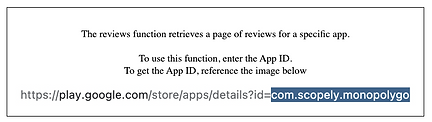

Other functions, like reviews, request data for one specific app (reviews of Instagram, for example). For these, I kept the original parameter: the app ID taken from the app's Google Play Store URL.

I kept this parameter because we were using the original open-source facundoolano tool as our base and didn't believe that developing a new method of using app IDs was in the scope of our project. However, because this request is a bit confusing, I included a sample image for users to refer to if they are confused about what is needed to run the feature.

I later learned, from feedback during a user testing session, that an easier method for users would be to copy the entire app URL and search with that. This method would be easy to implement and intuitive for users because by adding a couple of extra lines of code, the program can cut out the unique ID from the URL to use rather than having the user do it.
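A minimal sketch of that idea is below. It assumes the usual Play Store URL format, in which the app ID is the `id` query parameter; `extractAppId` is a hypothetical name and this is not the code from our tool.

```javascript
// Hypothetical helper: accept either a full Play Store URL or a bare app ID.
function extractAppId(input) {
  const trimmed = input.trim();
  let parsed;
  try {
    // The URL constructor throws on strings that are not absolute URLs.
    parsed = new URL(trimmed);
  } catch (err) {
    // Not a URL, so assume the user typed the app ID directly.
    return trimmed;
  }
  // For a URL, the app ID is the value of the "id" query parameter.
  // searchParams.get returns null when the parameter is missing.
  return parsed.searchParams.get("id");
}

console.log(
  extractAppId("https://play.google.com/store/apps/details?id=com.instagram.android")
);
console.log(extractAppId("com.instagram.android"));
```

Accepting both forms means users who already know the app ID are not forced to paste a URL, and a `null` result gives the interface a clear signal to show an error message for links that are not app pages.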

Technical Presentation

Below is the technical presentation my team and I gave, which won us 1st place among 5 groups, all in the HCI discipline. The presentation covers our product, goal, process, development, and findings.